Week 2 [Fri, Jan 15th] - Topics

Software Engineering → Introduction → Pros and cons

Can explain pros and cons of software engineering

Software engineering: "Software Engineering is the application of a systematic, disciplined, quantifiable approach to the development, operation, and maintenance of software" -- IEEE Standard Glossary of Software Engineering Terminology

The following description of the Joys of the Programming Craft was taken from Chapter 1 of the famous book The Mythical Man-Month, by Frederick P. Brooks.

Why is programming fun? What delights may its practitioner expect as his reward?

First is the sheer joy of making things. As the child delights in his mud pie, so the adult enjoys building things, especially things of his own design. I think this delight must be an image of God's delight in making things, a delight shown in the distinctness and newness of each leaf and each snowflake.

Second is the pleasure of making things that are useful to other people. Deep within, we want others to use our work and to find it helpful. In this respect the programming system is not essentially different from the child's first clay pencil holder "for Daddy's office."

Third is the fascination of fashioning complex puzzle-like objects of interlocking moving parts and watching them work in subtle cycles, playing out the consequences of principles built in from the beginning. The programmed computer has all the fascination of the pinball machine or the jukebox mechanism, carried to the ultimate.

Fourth is the joy of always learning, which springs from the nonrepeating nature of the task. In one way or another the problem is ever new, and its solver learns something: sometimes practical, sometimes theoretical, and sometimes both.

Finally, there is the delight of working in such a tractable medium. The programmer, like the poet, works only slightly removed from pure thought-stuff. He builds his castles in the air, from air, creating by the exertion of the imagination. Few media of creation are so flexible, so easy to polish and rework, so readily capable of realizing grand conceptual structures....

Yet the program construct, unlike the poet's words, is real in the sense that it moves and works, producing visible outputs separate from the construct itself. It prints results, draws pictures, produces sounds, moves arms. The magic of myth and legend has come true in our time. One types the correct incantation on a keyboard, and a display screen comes to life, showing things that never were nor could be.

Programming then is fun because it gratifies creative longings built deep within us and delights sensibilities we have in common with all men.

Not all is delight, however, and knowing the inherent woes makes it easier to bear them when they appear.

First, one must perform perfectly. The computer resembles the magic of legend in this respect, too. If one character, one pause, of the incantation is not strictly in proper form, the magic doesn't work. Human beings are not accustomed to being perfect, and few areas of human activity demand it. Adjusting to the requirement for perfection is, I think, the most difficult part of learning to program.

Next, other people set one's objectives, provide one's resources, and furnish one's information. One rarely controls the circumstances of his work, or even its goal. In management terms, one's authority is not sufficient for his responsibility. It seems that in all fields, however, the jobs where things get done never have formal authority commensurate with responsibility. In practice, actual (as opposed to formal) authority is acquired from the very momentum of accomplishment.

The dependence upon others has a particular case that is especially painful for the system programmer. He depends upon other people's programs. These are often maldesigned, poorly implemented, incompletely delivered (no source code or test cases), and poorly documented. So he must spend hours studying and fixing things that in an ideal world would be complete, available, and usable.

The next woe is that designing grand concepts is fun; finding nitty little bugs is just work. With any creative activity come dreary hours of tedious, painstaking labor, and programming is no exception.

Next, one finds that debugging has a linear convergence, or worse, where one somehow expects a quadratic sort of approach to the end. So testing drags on and on, the last difficult bugs taking more time to find than the first.

The last woe, and sometimes the last straw, is that the product over which one has labored so long appears to be obsolete upon (or before) completion. Already colleagues and competitors are in hot pursuit of new and better ideas. Already the displacement of one's thought-child is not only conceived, but scheduled.

This always seems worse than it really is. The new and better product is generally not available when one completes his own; it is only talked about. It, too, will require months of development. The real tiger is never a match for the paper one, unless actual use is wanted. Then the virtues of reality have a satisfaction all their own.

Of course the technological base on which one builds is always advancing. As soon as one freezes a design, it becomes obsolete in terms of its concepts. But implementation of real products demands phasing and quantizing. The obsolescence of an implementation must be measured against other existing implementations, not against unrealized concepts. The challenge and the mission are to find real solutions to real problems on actual schedules with available resources.

This then is programming, both a tar pit in which many efforts have floundered and a creative activity with joys and woes all its own. For many, the joys far outweigh the woes....

Guidance for the item(s) below:

Let's learn about the two main approaches to the SDLC (sequential and iterative) early so that we can better understand how this module works.

Can explain SDLC process models

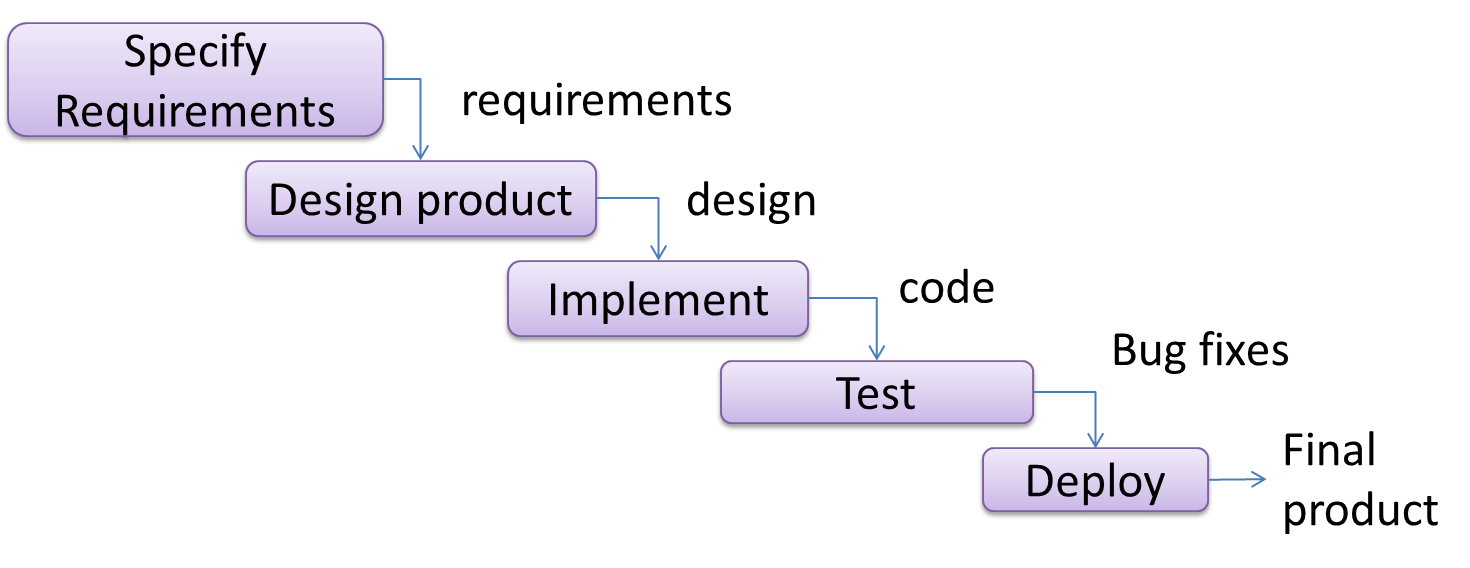

Software development goes through different stages such as requirements, analysis, design, implementation and testing. These stages are collectively known as the software development life cycle (SDLC). There are several approaches, known as software development life cycle models (also called software process models), that describe different ways to go through the SDLC. Each process model prescribes a "roadmap" for the software developers to manage the development effort. The roadmap describes the aims of the development stage(s), the artifacts or outcome of each stage, as well as the workflow i.e. the relationship between stages.

Can explain sequential process models

The sequential model, also called the waterfall model, models software development as a linear process, in which the project is seen as progressing steadily in one direction through the development stages. The name waterfall stems from how the model is drawn to look like a waterfall (see below).

When one stage of the process is completed, it should produce some artifacts to be used in the next stage. For example, upon completion of the requirements stage, a comprehensive list of requirements is produced that will see no further modifications. A strict application of the sequential model would require each stage to be completed before starting the next.

This could be a useful model when the problem statement is well-understood and stable. In such cases, using the sequential model should result in a timely and systematic development effort, provided that all goes well. As each stage has a well-defined outcome, the progress of the project can be tracked with relative ease.

The major problem with this model is that the requirements of a real-world project are rarely well-understood at the beginning and keep changing over time. One reason for this is that users are generally not aware of how a software application can be used without prior experience in using a similar application.

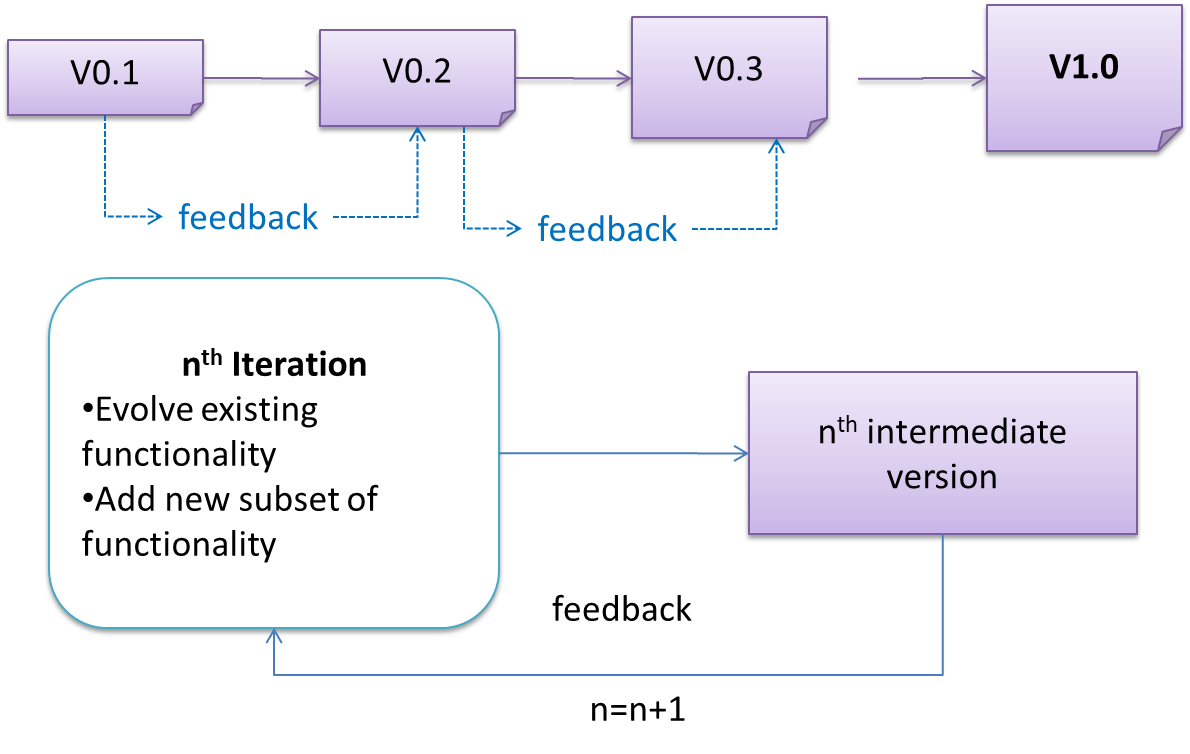

Can explain iterative process models

The iterative model (sometimes called iterative and incremental) advocates having several iterations of SDLC. Each of the iterations could potentially go through all the development stages, from requirements gathering to testing & deployment. Roughly, it appears to be similar to several cycles of the sequential model.

In this model, each of the iterations produces a new version of the product. Feedback on the new version can then be fed to the next iteration. Taking the Minesweeper game as an example, the iterative model will deliver a fully playable version from the early iterations. However, the first iteration will have primitive functionality, for example, a clumsy text based UI, fixed board size, limited randomization, etc. These functionalities will then be improved in later releases.

The iterative model can take a breadth-first or a depth-first approach to iteration planning.

- breadth-first: an iteration evolves all major components in parallel e.g., add a new feature fully, or enhance an existing feature.

- depth-first: an iteration focuses on fleshing out only some components e.g., update the backend to support a new feature that will be added in a future iteration.

Most projects use a mixture of breadth-first and depth-first iterations i.e., an iteration can contain some breadth-first work as well as some depth-first work.

Guidance for the item(s) below:

Let's jump in and learn how to get started using Git on your own computer. Yes, we are now switching our focus to the project management aspect of SE.

Can explain revision control

Revision control is the process of managing multiple versions of a piece of information. In its simplest form, this is something that many people do by hand: every time you modify a file, save it under a new name that contains a number, each one higher than the number of the preceding version.

Manually managing multiple versions of even a single file is an error-prone task, though, so software tools to help automate this process have long been available. The earliest automated revision control tools were intended to help a single user to manage revisions of a single file. Over the past few decades, the scope of revision control tools has expanded greatly; they now manage multiple files, and help multiple people to work together. The best modern revision control tools have no problem coping with thousands of people working together on projects that consist of hundreds of thousands of files.

Revision control software will track the history and evolution of your project, so you don't have to. For every change, you'll have a log of who made it; why they made it; when they made it; and what the change was.

Revision control software makes it easier for you to collaborate when you're working with other people. For example, when people more or less simultaneously make potentially incompatible changes, the software will help you to identify and resolve those conflicts.

It can help you to recover from mistakes. If you make a change that later turns out to be an error, you can revert to an earlier version of one or more files. In fact, a really good revision control tool will even help you to efficiently figure out exactly when a problem was introduced.

It will help you to work simultaneously on, and manage the drift between, multiple versions of your project. Most of these reasons are equally valid, at least in theory, whether you're working on a project by yourself, or with a hundred other people.

-- [adapted from bryan-mercurial-guide]

RCS: Revision control software are the software tools that automate the process of Revision Control i.e. managing revisions of software artifacts.

Revision: A revision (some seem to use it interchangeably with version while others seem to distinguish the two -- here, let us treat them as the same, for simplicity) is a state of a piece of information at a specific time that is a result of some changes to it e.g., if you modify the code and save the file, you have a new revision (or a version) of that file.

Revision control software are also known as Version Control Software (VCS), and by a few other names.

Can explain repositories

Repository (repo for short): The database of the history of a directory being tracked by an RCS software (e.g. Git).

The repository is the database where the meta-data about the revision history are stored. Suppose you want to apply revision control on files in a directory called ProjectFoo. In that case, you need to set up a repo (short for repository) in the ProjectFoo directory, which is referred to as the working directory of the repo. For example, Git uses a hidden folder named .git inside the working directory.

You can have multiple repos on your computer, each repo revision-controlling files of a different working directory, for example, files of different projects.

Guidance for the item(s) below:

The following section gives a specific scenario that includes the steps of initializing a Git repository.

If you are new to Git, we highly recommend that you follow those steps on your own computer to get some hands-on practice as you learn Git usage.

Can create a local Git repo

Let's take your first few steps in your Git (with GitHub) journey.

0. Take a peek at the full picture. Optionally, if you are the sort who prefers to have some sense of the full picture before you get into the nitty-gritty details, watch the video in the panel below:

Git Overview

1. The first step is to install SourceTree, which is Git + a GUI for Git. If you prefer to use Git via the command line (i.e., without a GUI), you can install Git instead.

2. Next, initialize a repository. Let us assume you want to version control content in a specific directory. In that case, you need to initialize a Git repository in that directory. Here are the steps:

Create a directory for the repo (e.g., a directory named things).

Windows: Click File → Clone/New…. Click on Create button.

Mac: New... → Create New Repository.

Enter the location of the directory (Windows version shown below) and click Create.

Go to the things folder and observe how a hidden folder .git has been created.

Windows: you might have to configure Windows Explorer to show hidden files.

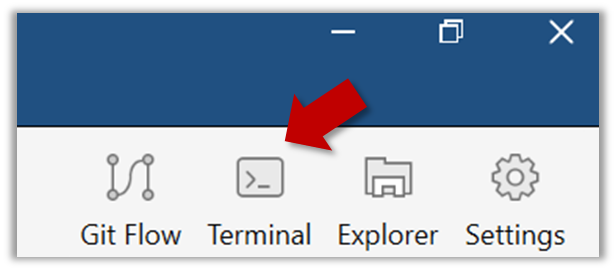

If you prefer the command line instead of SourceTree:

Open a Git Bash terminal. If you installed SourceTree, you can click the Terminal button to open a Git Bash terminal.

Navigate to the things directory.

Use the command git init which should initialize the repo.

    $ git init
    Initialized empty Git repository in c:/repos/things/.git/

You can use the command ls -a to view all files, which should show the .git directory that was created by the previous command.

    $ ls -a
    . .. .git

You can also use the git status command to check the status of the newly-created repo. It should respond with something like the following:

    $ git status
    # On branch master
    #
    # Initial commit
    #
    nothing to commit (create/copy files and use "git add" to track)

Can explain saving history

Tracking and ignoring

In a repo, you can specify which files to track and which files to ignore. Some files such as temporary log files created during the build/test process should not be revision-controlled.

Staging and committing

Committing saves a snapshot of the current state of the tracked files in the revision control history. Such a snapshot is also called a commit (i.e. the noun).

When ready to commit, you first stage the specific changes you want to commit. This intermediate step allows you to commit only some changes while saving other changes for a later commit.
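The effect of staging can be seen in a small experiment. The sketch below assumes Git is installed and works in a throwaway directory; the identity values and the two file names are placeholders chosen for illustration. Two files are created, but only the staged one ends up in the commit.

```shell
set -e
# Placeholder identity so that commits work on a fresh machine (an assumption).
export GIT_AUTHOR_NAME="Demo User" GIT_AUTHOR_EMAIL="demo@example.com"
export GIT_COMMITTER_NAME="Demo User" GIT_COMMITTER_EMAIL="demo@example.com"

repo=$(mktemp -d)                     # throwaway working directory
cd "$repo"
git init -q

echo "apples" > fruits.txt
echo "red" > colors.txt

git add fruits.txt                    # stage only one of the two new files
git commit -q -m "Add fruits.txt"     # the snapshot contains only what was staged

committed=$(git ls-files)             # files recorded in the repo so far
pending=$(git status --porcelain)     # changes left for a later commit
echo "committed: $committed"
echo "pending:   $pending"
```

Here colors.txt stays untracked (shown with the ?? marker) until you stage and commit it separately.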

Can commit using Git

After initializing a repository, Git can help you with revision controlling files inside the working directory. However, it is not automatic. It is up to you to tell Git which of your changes (aka revisions) should be committed to its memory for later use. Saving changes into Git's memory in that way is often called committing and a change saved to the revision history is called a commit.

Working directory: the root directory revision-controlled by Git (e.g., the directory in which the repo was initialized).

Commit (noun): a change (aka a revision) saved in the Git revision history.

(verb): the act of creating a commit i.e., saving a change in the working directory into the Git revision history.

Here are the steps you can follow to learn how to work with Git commits:

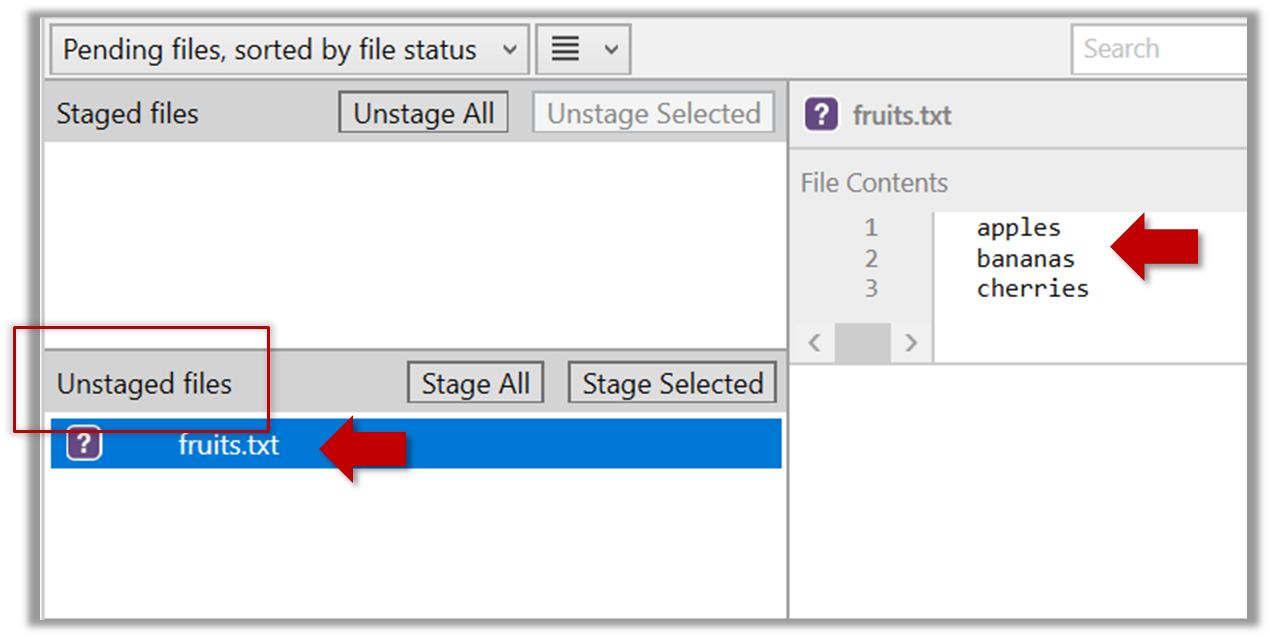

1. Do some changes to the content inside the working directory e.g., create a file named fruits.txt in the things directory and add some dummy text to it.

2. Observe how the file is detected by Git.

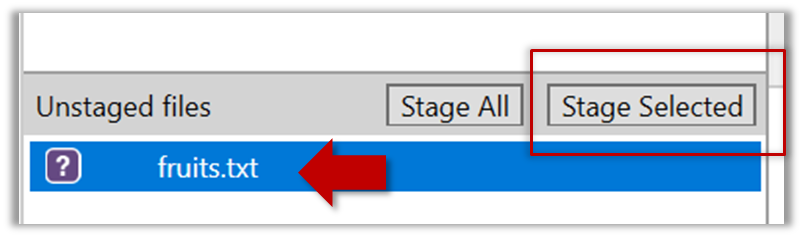

The file is shown as ‘unstaged’.

You can use the git status command to check the status of the working directory.

    $ git status
    # On branch master
    #
    # Initial commit
    #
    # Untracked files:
    # (use "git add <file>..." to include in what will be committed)
    #
    # fruits.txt
    nothing added to commit but untracked files present (use "git add" to track)

3. Stage the changes to commit: Although Git has detected the file in the working directory, it will not do anything with the file unless you tell it to. Suppose you want to commit the current changes to the file. First, you should stage the file.

Stage (verb): Instructing Git to prepare a file for committing.

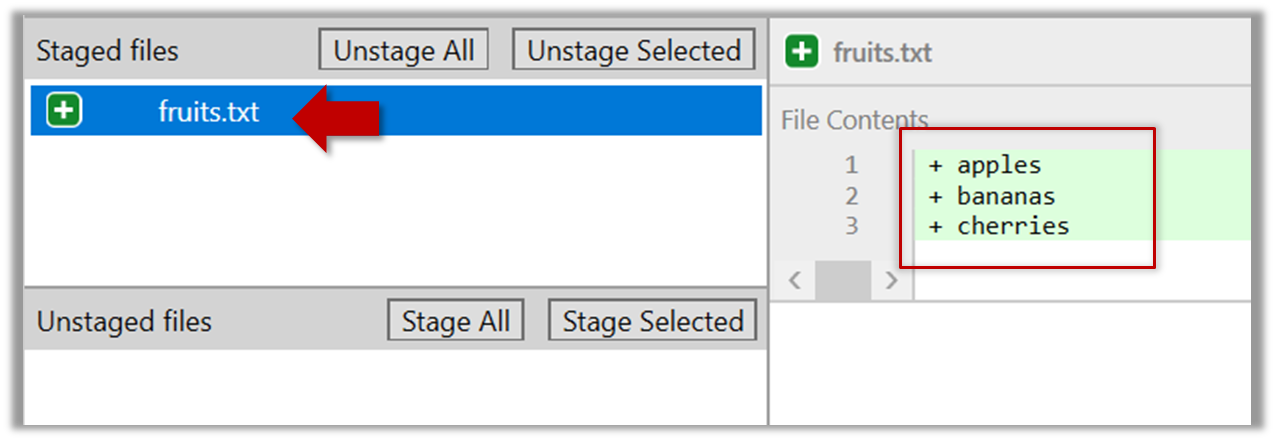

Select the fruits.txt and click on the Stage Selected button.

fruits.txt should appear in the Staged files panel now.

You can use the stage or the add command (they are synonyms, add is the more popular choice) to stage files.

    $ git add fruits.txt
    $ git status
    # On branch master
    #
    # Initial commit
    #
    # Changes to be committed:
    # (use "git rm --cached <file>..." to unstage)
    #
    # new file: fruits.txt
    #

4. Commit the staged version of fruits.txt.

Click the Commit button, enter a commit message e.g. add fruits.txt into the text box, and click Commit.

Use the commit command to commit. The -m switch is used to specify the commit message.

git commit -m "Add fruits.txt"

You can use the log command to see the commit history.

    $ git log
    commit 8fd30a6910efb28bb258cd01be93e481caeab846
    Author: … < … @... >
    Date: Wed Jul 5 16:06:28 2017 +0800

        Add fruits.txt

Note the existence of something called the master branch. Git allows you to have multiple branches (i.e. it is a way to evolve the content in parallel) and Git auto-creates a branch named master on which the commits go on by default.

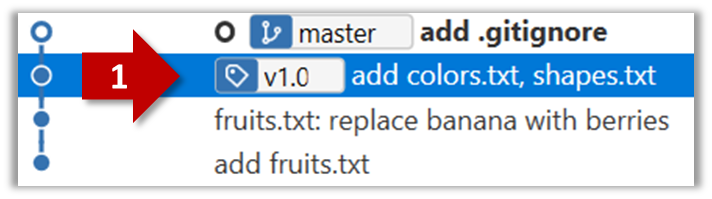

5. Do a few more commits.

Make some changes to fruits.txt (e.g. add some text and delete some text). Stage the changes, and commit the changes using the same steps you followed before.

Next, add two more files colors.txt and shapes.txt to the same working directory. Add a third commit to record the current state of the working directory.

6. See the revision graph: Note how commits form a path-like structure aka the revision tree/graph. In the revision graph, each commit is shown as linked to its 'parent' commit (i.e., the commit before it).

To see the revision graph, click on the History item on the menu on the right edge of SourceTree.

The gitk command opens a rudimentary graphical view of the revision graph.
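If you want a text-only view of the same structure, the following sketch may help. It assumes Git is installed, uses a throwaway directory and a placeholder identity, and makes three small commits purely for illustration. It then prints the graph and reads the parent link stored in a commit.

```shell
set -e
# Placeholder identity so that commits work on a fresh machine (an assumption).
export GIT_AUTHOR_NAME="Demo User" GIT_AUTHOR_EMAIL="demo@example.com"
export GIT_COMMITTER_NAME="Demo User" GIT_COMMITTER_EMAIL="demo@example.com"

repo=$(mktemp -d)
cd "$repo"
git init -q

echo "apples" > fruits.txt
git add fruits.txt
git commit -q -m "Add fruits.txt"
first=$(git rev-parse HEAD)           # hash of the first commit

echo "bananas" >> fruits.txt
git commit -q -a -m "Add bananas"
second=$(git rev-parse HEAD)          # hash of the second commit

echo "cherries" >> fruits.txt
git commit -q -a -m "Add cherries"

git --no-pager log --oneline --graph  # text rendering of the revision graph

# %P prints the parent hash(es) recorded inside a commit.
parent_of_tip=$(git log -1 --format=%P HEAD)
parent_of_first=$(git log -1 --format=%P "$first")   # empty: no parent
echo "parent of latest commit: $parent_of_tip"
```

The parent of the latest commit is the second commit, and the very first commit has no parent at all.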

Resources

- Try Git is an online simulation/tutorial of Git basics. You can try its first few steps to solidify what you have learned in this LO.

Can set Git to ignore files

Often, there are files inside the Git working folder that you don't want to revision-control e.g., temporary log files. Follow the steps below to learn how to configure Git to ignore such files.

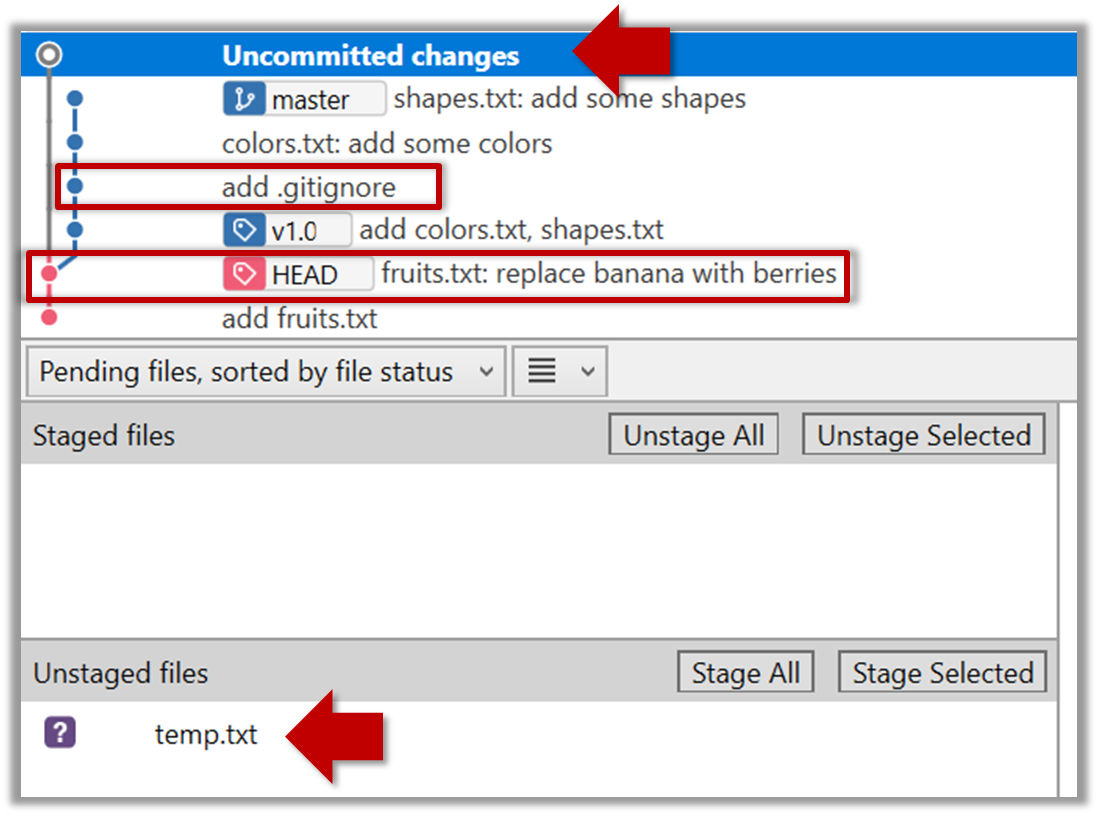

1. Add a file into your repo's working folder that you supposedly don't want to revision-control e.g., a file named temp.txt. Observe how Git has detected the new file.

2. Tell Git to ignore that file:

Using SourceTree: The file should be currently listed under Unstaged files. Right-click it and choose Ignore…. Choose Ignore exact filename(s) and click OK. Observe that a file named .gitignore has been created in the working directory root and has the following line in it.

temp.txt

Using the command line: Create a file named .gitignore in the working directory root and add the following line in it.

temp.txt

The .gitignore file

The .gitignore file tells Git which files to ignore when tracking revision history. That file itself can be either revision controlled or ignored.

- To version control it (the more common choice, which allows you to track how the .gitignore file changes over time), simply commit it as you would commit any other file.

- To ignore it, follow the same steps you followed above when you set Git to ignore the temp.txt file.

It supports file patterns e.g., adding temp/*.tmp to the .gitignore file prevents Git from tracking any .tmp files in the temp directory.

More information about the .gitignore file: git-scm.com/docs/gitignore
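To check how a pattern behaves, you can ask Git directly. This is a minimal sketch assuming Git is installed; it uses a throwaway directory, and build.tmp and notes.txt are hypothetical file names. The git check-ignore command exits with status 0 when a path is ignored and 1 when it is not.

```shell
set -e
repo=$(mktemp -d)                     # throwaway working directory
cd "$repo"
git init -q

mkdir temp
touch temp/build.tmp temp/notes.txt   # hypothetical files for illustration
echo "temp/*.tmp" > .gitignore        # the pattern from the text above

if git check-ignore -q temp/build.tmp; then tmp_ignored=yes; else tmp_ignored=no; fi
if git check-ignore -q temp/notes.txt; then txt_ignored=yes; else txt_ignored=no; fi

echo "temp/build.tmp ignored? $tmp_ignored"
echo "temp/notes.txt ignored? $txt_ignored"
```

Only the .tmp file matches the pattern, so notes.txt in the same directory is still visible to Git.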

Can explain using history

RCS tools store the history of the working directory as a series of commits. This means you should commit after each change that you want the RCS to 'remember'.

Each commit in a repo is a recorded point in the history of the project that is uniquely identified by an auto-generated hash e.g. a16043703f28e5b3dab95915f5c5e5bf4fdc5fc1.

You can tag a specific commit with a more easily identifiable name e.g. v1.0.2.

To see what changed between two points of the history, you can ask the RCS tool to diff the two commits concerned.

To restore the state of the working directory at a point in the past, you can checkout the commit concerned i.e., you can traverse the history of the working directory simply by checking out the commits you are interested in.

Can tag commits using Git

Each Git commit is uniquely identified by a hash e.g., d670460b4b4aece5915caf5c68d12f560a9fe3e4. As you can imagine, using such an identifier is not very convenient for our day-to-day use. As a solution, Git allows adding a more human-readable tag to a commit e.g., v1.0-beta.

Here's how you can tag a commit in a local repo (e.g. in the samplerepo-things repo):

Right-click on the commit (in the graphical revision graph) you want to tag and choose Tag….

Specify the tag name e.g. v1.0 and click Add Tag.

The added tag will appear in the revision graph view.

To add a tag to the current commit as v1.0,

git tag -a v1.0

To view tags

git tag

To learn how to add a tag to a past commit, go to the ‘Git Basics – Tagging’ page of the git-scm book and refer to the ‘Tagging Later’ section.

Remember to push tags to the repo. A normal push does not include tags.

    # push a specific tag
    git push origin v1.0

    # push all tags
    git push origin --tags

After adding a tag to a commit, you can use the tag to refer to that commit, as an alternative to using the hash.
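As a rough illustration of using a tag in place of a hash, the sketch below (assuming Git is installed, with a throwaway directory and a placeholder identity) tags the first of two commits and then resolves the tag back to that commit. The -m option is used so that Git does not open an editor for the tag message.

```shell
set -e
# Placeholder identity so that commits and tags work on a fresh machine (an assumption).
export GIT_AUTHOR_NAME="Demo User" GIT_AUTHOR_EMAIL="demo@example.com"
export GIT_COMMITTER_NAME="Demo User" GIT_COMMITTER_EMAIL="demo@example.com"

repo=$(mktemp -d)
cd "$repo"
git init -q

echo "apples" > fruits.txt
git add fruits.txt
git commit -q -m "Add fruits.txt"
git tag -a v1.0 -m "First release"    # tag the current (first) commit

echo "bananas" >> fruits.txt
git commit -q -a -m "Add bananas"     # the tag stays on the earlier commit

tags=$(git tag)                               # list the tags in the repo
tagged=$(git rev-parse "v1.0^{commit}")       # commit that the tag refers to
first=$(git rev-parse HEAD~1)                 # the first commit, found via its position
subject=$(git log -1 --format=%s v1.0)        # use the tag where a hash would go
echo "v1.0 -> $tagged ($subject)"
```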

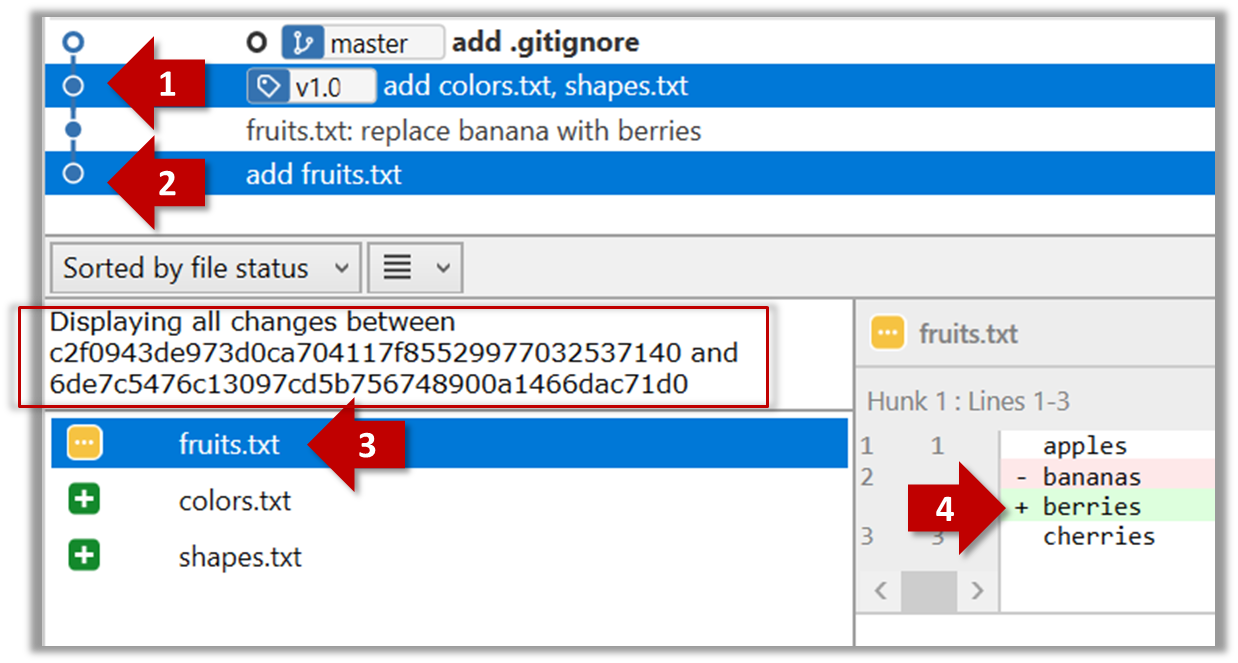

Can compare git revisions

Git can show you what changed in each commit.

To see which files changed in a commit, click on the commit. To see what changed in a specific file in that commit, click on the file name.

    git show <part-of-commit-hash>

Example:

    $ git show 5bc0e30
    commit 5bc0e30635a754908dbdd3d2d833756cc4b52ef3
    Author: … < … >
    Date: Sat Jul 8 16:50:27 2017 +0800

        fruits.txt: replace banana with berries

    diff --git a/fruits.txt b/fruits.txt
    index 15b57f7..17f4528 100644
    --- a/fruits.txt
    +++ b/fruits.txt
    @@ -1,3 +1,3 @@
     apples
    -bananas
    +berries
     cherries

Git can also show you the difference between two points in the history of the repo.

Select the two points you want to compare using Ctrl+Click. The differences between the two selected versions will show up in the bottom half of SourceTree, as shown in the screenshot below.

The same method can be used to compare the current state of the working directory (which might have uncommitted changes) to a point in the history.

The diff command can be used to view the differences between two points of the history.

- git diff: shows the changes (uncommitted) since the last commit.

- git diff 0023cdd..fcd6199: shows the changes between the points indicated by commit hashes. Note that when using a commit hash in a Git command, you can use only the first few characters (e.g., first 7-10 chars) as that's usually enough for Git to locate the commit.

- git diff v1.0..HEAD: shows changes that happened from the commit tagged as v1.0 to the most recent commit.
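The two most common forms of the diff command can be tried out as follows. This is only a sketch, assuming Git is installed; it builds a tiny history in a throwaway directory with a placeholder identity, and the dates line is a made-up uncommitted change.

```shell
set -e
# Placeholder identity so that commits work on a fresh machine (an assumption).
export GIT_AUTHOR_NAME="Demo User" GIT_AUTHOR_EMAIL="demo@example.com"
export GIT_COMMITTER_NAME="Demo User" GIT_COMMITTER_EMAIL="demo@example.com"

repo=$(mktemp -d)
cd "$repo"
git init -q

printf 'apples\nbananas\ncherries\n' > fruits.txt
git add fruits.txt
git commit -q -m "Add fruits.txt"
git tag v1.0                                   # mark the first commit

printf 'apples\nberries\ncherries\n' > fruits.txt
git commit -q -a -m "fruits.txt: replace banana with berries"

echo "dates" >> fruits.txt                     # a change that is not committed yet

committed_diff=$(git diff v1.0..HEAD)          # from the tagged commit to the latest commit
uncommitted_diff=$(git diff)                   # changes since the last commit

printf '%s\n' "$committed_diff"
printf '%s\n' "$uncommitted_diff"
```

The first diff reports the bananas/berries swap only, while the second reports only the added dates line.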

Can load a specific version of a Git repo

Git can load a specific version of the history to the working directory. Note that if you have uncommitted changes in the working directory, you need to stash them first to prevent them from being overwritten.

Double-click the commit you want to load to the working directory, or right-click on that commit and choose Checkout....

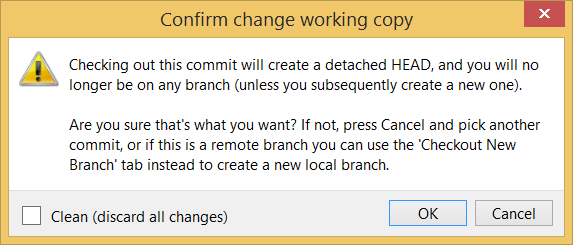

Click OK to the warning about ‘detached HEAD’ (similar to below).

The specified version is now loaded to the working folder, as indicated by the HEAD label. HEAD is a reference to the currently checked out commit.

If you checkout a commit that comes before the commit in which you added the .gitignore file, Git will now show ignored files as ‘unstaged modifications’ because at that stage Git hasn’t been told to ignore those files.

To go back to the latest commit, double-click it.

Use the checkout <commit-identifier> command to change the working directory to the state it was in at a specific past commit.

- git checkout v1.0: loads the state as at commit tagged v1.0

- git checkout 0023cdd: loads the state as at commit with the hash 0023cdd

- git checkout HEAD~2: loads the state that is 2 commits behind the most recent commit

For now, you can ignore the warning about ‘detached HEAD’.
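Here is a small sketch of travelling back in history and returning, assuming Git is installed. It uses a throwaway directory, a placeholder identity, and three illustrative commits. The branch name is read from Git rather than hard-coded because it may be master or main depending on your setup.

```shell
set -e
# Placeholder identity so that commits work on a fresh machine (an assumption).
export GIT_AUTHOR_NAME="Demo User" GIT_AUTHOR_EMAIL="demo@example.com"
export GIT_COMMITTER_NAME="Demo User" GIT_COMMITTER_EMAIL="demo@example.com"

repo=$(mktemp -d)
cd "$repo"
git init -q

echo "apples" > fruits.txt
git add fruits.txt
git commit -q -m "Add fruits.txt"
echo "bananas" >> fruits.txt
git commit -q -a -m "Add bananas"
echo "cherries" >> fruits.txt
git commit -q -a -m "Add cherries"

branch=$(git rev-parse --abbrev-ref HEAD)   # name of the current branch

git checkout -q HEAD~2                      # load the state from 2 commits ago
old=$(cat fruits.txt)                       # file content as it was back then

git checkout -q "$branch"                   # return to the latest commit
latest=$(tail -n 1 fruits.txt)              # last line of the current file

echo "2 commits ago: $old"
echo "latest last line: $latest"
```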

Can explain remote repositories

Remote repositories are repos that are hosted on remote computers and allow remote access. They are especially useful for sharing the revision history of a codebase among team members of a multi-person project. They can also serve as a remote backup of your codebase.

It is possible to set up your own remote repo on a server, but the easier option is to use a remote repo hosting service such as GitHub or BitBucket.

You can clone a repo to create a copy of that repo in another location on your computer. The copy will even have the revision history of the original repo i.e., identical to the original repo. For example, you can clone a remote repo onto your computer to create a local copy of the remote repo.

When you clone from a repo, the original repo is commonly referred to as the upstream repo. A repo can have multiple upstream repos. For example, let's say a repo repo1 was cloned as repo2 which was then cloned as repo3. In this case, repo1 and repo2 are upstream repos of repo3.

You can pull from one repo to another, to receive new commits in the second repo, if the repos have a shared history. Let's say some new commits were added to the upstream repo after you cloned it and you would like to copy over those new commits to your own clone i.e., sync your clone with the upstream repo. In that case, you pull from the upstream repo to your clone.

You can push new commits in one repo to another repo which will copy the new commits onto the destination repo. Note that pushing to a repo requires you to have write-access to it. Furthermore, you can push between repos only if those repos have a shared history among them (i.e., one was created by copying the other at some point in the past).

Cloning, pushing, and pulling can be done between two local repos too, although it is more common for them to involve a remote repo.

A repo can work with any number of other repositories as long as they have a shared history e.g., repo1 can pull from (or push to) repo2 and repo3 if they have a shared history between them.
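Because cloning and pulling also work between local repos, you can try them without a network connection. The following is a minimal sketch assuming Git is installed; repo1 and repo2 live in a throwaway directory, and the identity is a placeholder.

```shell
set -e
# Placeholder identity so that commits work on a fresh machine (an assumption).
export GIT_AUTHOR_NAME="Demo User" GIT_AUTHOR_EMAIL="demo@example.com"
export GIT_COMMITTER_NAME="Demo User" GIT_COMMITTER_EMAIL="demo@example.com"

base=$(mktemp -d)                           # throwaway parent directory
git init -q "$base/repo1"

cd "$base/repo1"
echo "apples" > fruits.txt
git add fruits.txt
git commit -q -m "Add fruits.txt"
branch=$(git rev-parse --abbrev-ref HEAD)   # master or main, depending on your setup

git clone -q "$base/repo1" "$base/repo2"    # repo2 gets a copy of repo1's history

echo "bananas" >> fruits.txt                # a new commit appears upstream after the clone
git commit -q -a -m "Add bananas"

cd "$base/repo2"
before=$(git rev-list --count HEAD)         # number of commits before the pull
git pull -q --ff-only origin "$branch"      # copy the new commit into the clone
after=$(git rev-list --count HEAD)

echo "commits in repo2: $before before the pull, $after after"
```

In this sketch repo1 plays the role of the upstream repo, and Git registers it in repo2 under the name origin.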

A fork is a remote copy of a remote repo. As you know, cloning creates a local copy of a repo. In contrast, forking creates a remote copy of a Git repo hosted on GitHub. This is particularly useful if you want to play around with a GitHub repo but you don't have write permissions to it; you can simply fork the repo and do whatever you want with the fork as you are the owner of the fork.

A pull request (PR for short) is a mechanism for contributing code to a remote repo, i.e., "I'm requesting you to pull my proposed changes to your repo". For this to work, the two repos must have a shared history. The most common case is sending PRs from a fork to its upstream repo (i.e., the repo you forked from).

Can clone a remote repo

Given below is an example scenario you can try yourself to learn Git cloning.

Suppose you want to clone the sample repo samplerepo-things to your computer.

Note that the URL of the GitHub project is different from the URL you need to clone a repo in that GitHub project. e.g.

GitHub project URL: https://github.com/se-edu/samplerepo-things

Git repo URL: https://github.com/se-edu/samplerepo-things.git (note the .git at the end)

File → Clone / New… and provide the URL of the repo and the destination directory.

Can pull changes from a repo

Here's a scenario you can try in order to learn how to pull commits from another repo to yours.

1. Clone a repo (e.g., the repo used in [Git & GitHub → Clone]) to be used for this activity.

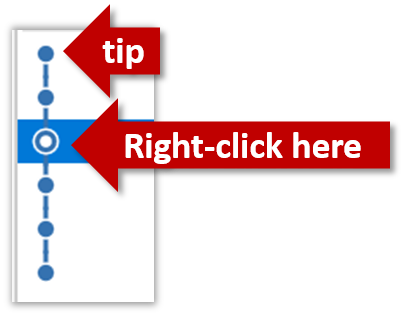

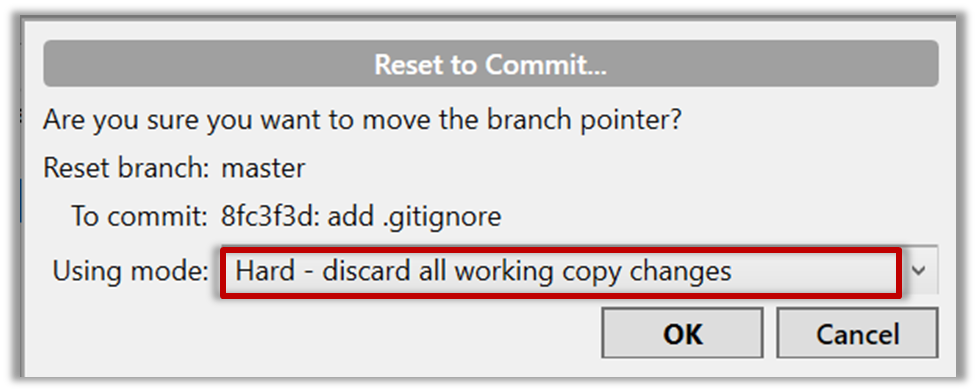

2. Delete the last few commits to simulate cloning the repo a few commits ago.

Right-click the target commit (i.e. the commit that is 2 commits behind the tip) and choose Reset current branch to this commit.

Choose the Hard - … option and click OK.

This is what you will see.

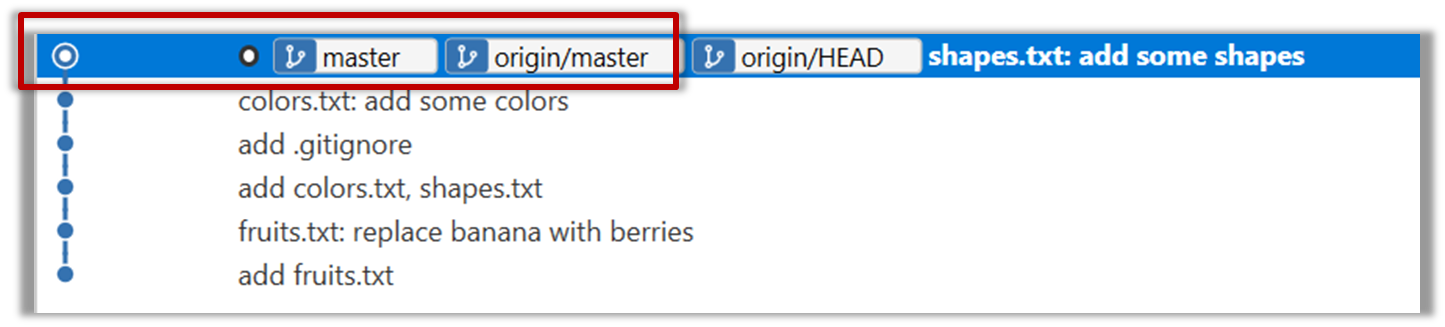

Note the following (cross refer the screenshot above):

Arrow marked as a: The local repo is now at this commit, marked by the master label.

Arrow marked as b: The origin/master label shows what is the latest commit in the master branch in the remote repo.

Use the reset command to delete commits at the tip of the revision history.

git reset --hard HEAD~2

Now, your local repo state is exactly how it would be if you had cloned the repo 2 commits ago, as if somebody has added two more commits to the remote repo since you cloned it.

3. Pull from the other repo: To get those missing commits to your local repo (i.e. to sync your local repo with the upstream repo) you can do a pull.

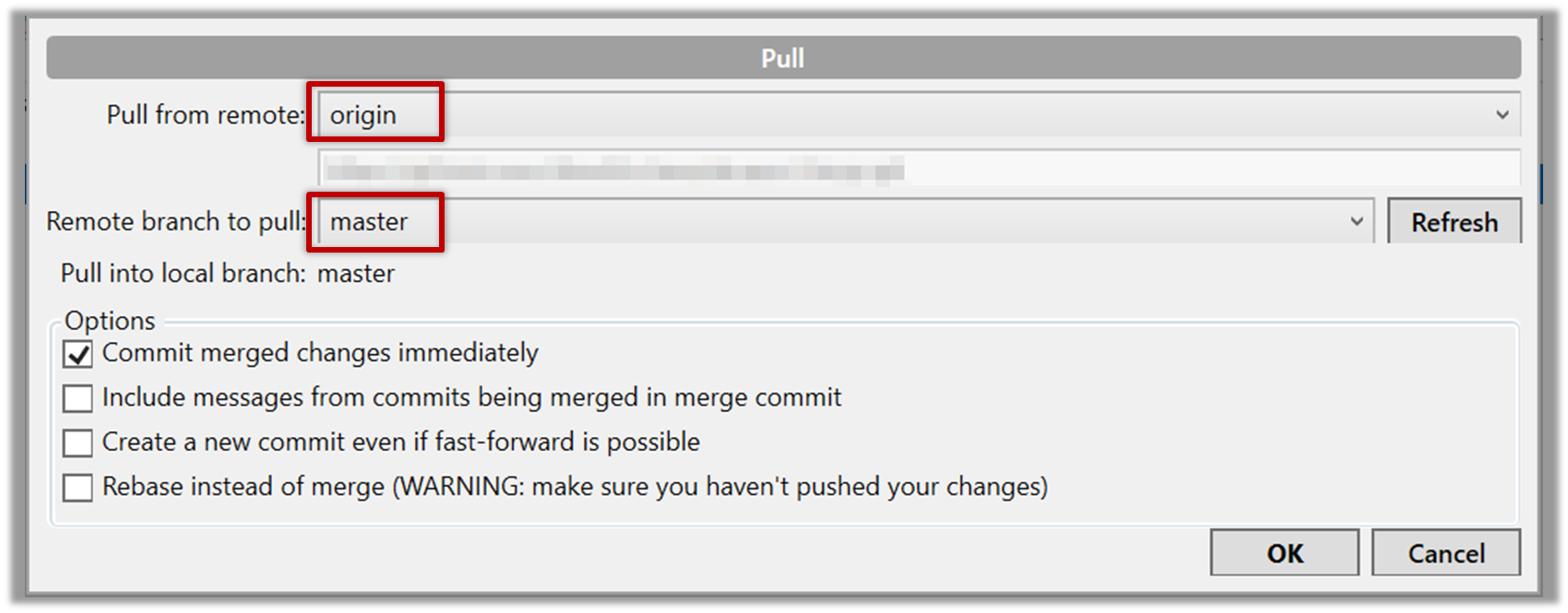

Click the Pull button in the main menu, choose origin and master in the next dialog, and click OK.

Now you should see something like this where master and origin/master are both pointing to the same commit.

git pull origin

You can also do a fetch instead of a pull in which case the new commits will be downloaded to your repo but the working directory will remain at the current commit. To move the current state to the latest commit that was downloaded, you need to do a merge. A pull is a shortcut that does both those steps in one go.
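The two-step alternative can be sketched like this, assuming Git is installed and using two local repos in a throwaway directory (with a placeholder identity) in place of a real remote. Note how the local branch does not move until the merge.

```shell
set -e
# Placeholder identity so that commits work on a fresh machine (an assumption).
export GIT_AUTHOR_NAME="Demo User" GIT_AUTHOR_EMAIL="demo@example.com"
export GIT_COMMITTER_NAME="Demo User" GIT_COMMITTER_EMAIL="demo@example.com"

base=$(mktemp -d)
git init -q "$base/repo1"

cd "$base/repo1"
echo "apples" > fruits.txt
git add fruits.txt
git commit -q -m "Add fruits.txt"
branch=$(git rev-parse --abbrev-ref HEAD)       # master or main, depending on your setup

git clone -q "$base/repo1" "$base/repo2"

echo "bananas" >> fruits.txt                    # new commit in the original repo
git commit -q -a -m "Add bananas"

cd "$base/repo2"
git fetch -q origin                             # step 1: download the new commits
local_before=$(git rev-list --count HEAD)       # local branch has not moved yet
downloaded=$(git rev-list --count "origin/$branch")

git merge -q --ff-only "origin/$branch"         # step 2: move to the downloaded commit
local_after=$(git rev-list --count HEAD)

echo "local: $local_before, downloaded: $downloaded, after merge: $local_after"
```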

Working with multiple remotes

When you clone a repo, Git automatically adds a remote repo named origin to your repo configuration. As you know, you can pull commits from that repo. A Git repo can also work with remote repos other than the one it was cloned from.

To communicate with another remote repo, you can first add it as a remote of your repo. Here is an example scenario you can follow to learn how to pull from another repo:

Open the local repo in SourceTree. Suggested: Use your local clone of the

samplerepo-thingsrepo.Choose

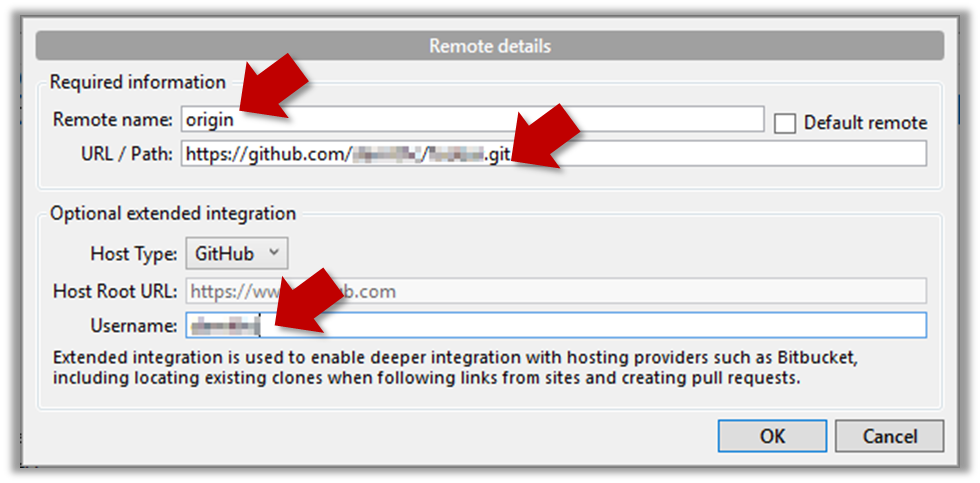

Repository→Repository Settingsmenu option.Add a new remote to the repo with the following values.

Remote name: the name you want to assign to the remote repo e.g.,upstream1URL/path: the URL of your repo (ending in.git) that. Suggested:https://github.com/se-edu/samplerepo-things-2.git(samplerepo-things-2is another repo that has a shared history withsamplerepo-things)Username: your GitHub username

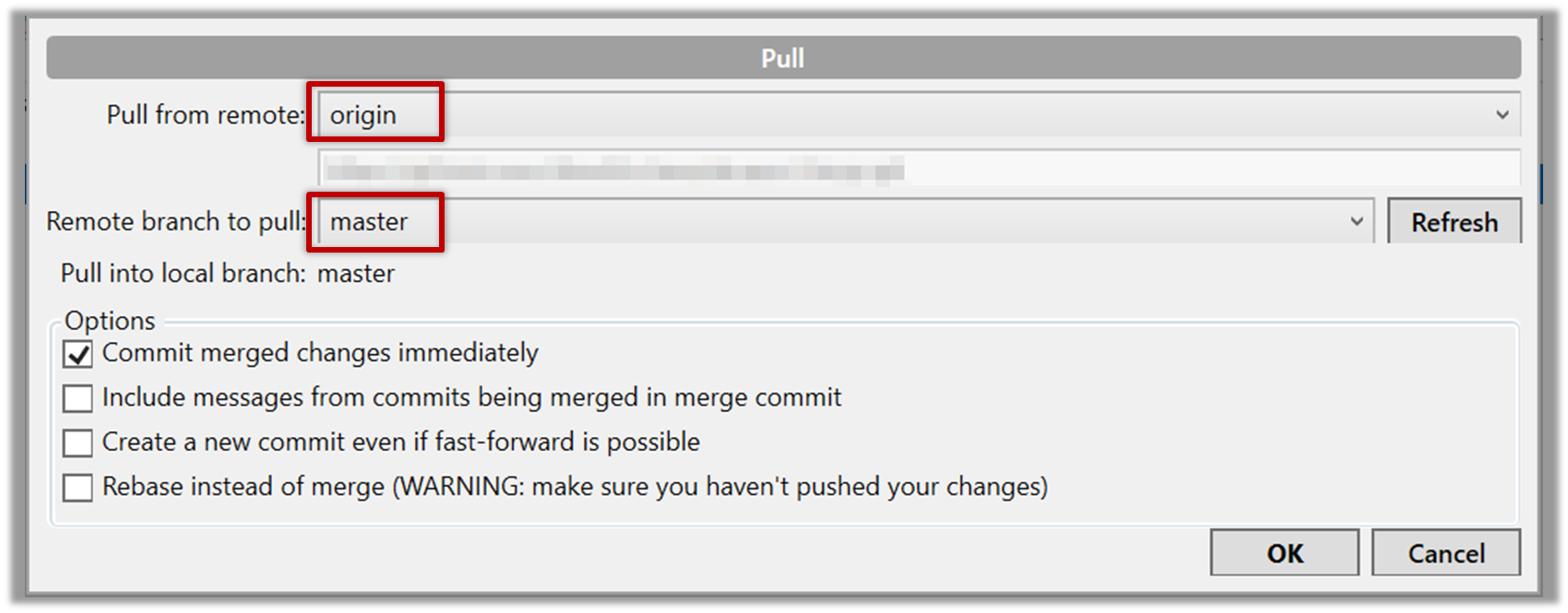

Now, you can pull from the added repo as you did before but choose the remote name of the repo you want to pull from (instead of

origin):

If the

Remote branch to pulldropdown is empty, click theRefreshbutton on its right.If the pull from the

samplerepo-things-2was successful, you should have received one more commit into your local repo.

Navigate to the folder containing the local repo.

Set the new remote repo as a remote of the local repo.

command: git remote add {remote_name} {remote_repo_url}

e.g., git remote add upstream1 https://github.com/johndoe/foobar.git

Now you can pull from the new remote.

e.g., git pull upstream1 master

Tools → Git & GitHub → Pull

Can fork a repo

Given below is a scenario you can try in order to learn how to fork a repo:

0. Create a GitHub account if you don't have one yet.

1. Go to the GitHub repo you want to fork e.g., samplerepo-things

2. Click on the Fork button on the top-right corner. In the next step, choose to fork to your own account or to another GitHub organization that you are an admin of.

GitHub does not allow you to fork the same repo more than once to the same destination. If you want to re-fork, you need to delete the previous fork.

Tools → Git & GitHub → Pull

Can push to a remote repo

Given below is a scenario you can try in order to learn how to push commits to a remote repo hosted on GitHub:

1. Fork an existing GitHub repo (e.g., samplerepo-things) to your GitHub account.

2. Clone the fork (not the original) to your computer.

3. Commit some changes in your local repo.

4. Push the new commits to your fork on GitHub.

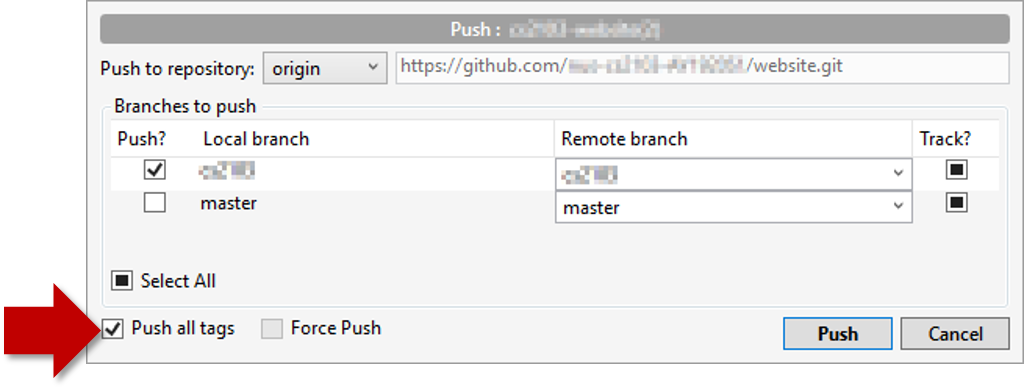

Click the Push button on the main menu, ensure the settings are as follows in the next dialog, and click the Push button on the dialog.

Tags are not included in a normal push. Remember to tick Push all tags when pushing to the remote repo if you want them to be pushed to the repo.

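Use the command git push origin master. Enter your GitHub username and password when prompted (GitHub now expects a personal access token in place of the account password for Git operations over HTTPS).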

Tags are not included in a normal push. To push a tag, use this command: git push origin <tag_name> e.g. git push origin v1.0

You can push to repos other than the one you cloned from, as long as the target repo and your repo have a shared history.

- Add the GitHub repo URL as a remote, if you haven't done so already.

- Push to the target repo.

Push your repo to the new remote the usual way, but select the name of the target remote instead of origin and remember to select the Track checkbox.

Push to the new remote the usual way e.g., git push upstream1 master (assuming you gave the name upstream1 to the remote).

You can even push an entire local repository to GitHub, to form an entirely new remote repository. For example, you created a local repo and worked with it for a while but now you want to upload it onto GitHub (as a backup or to share it with others). The steps are given below.

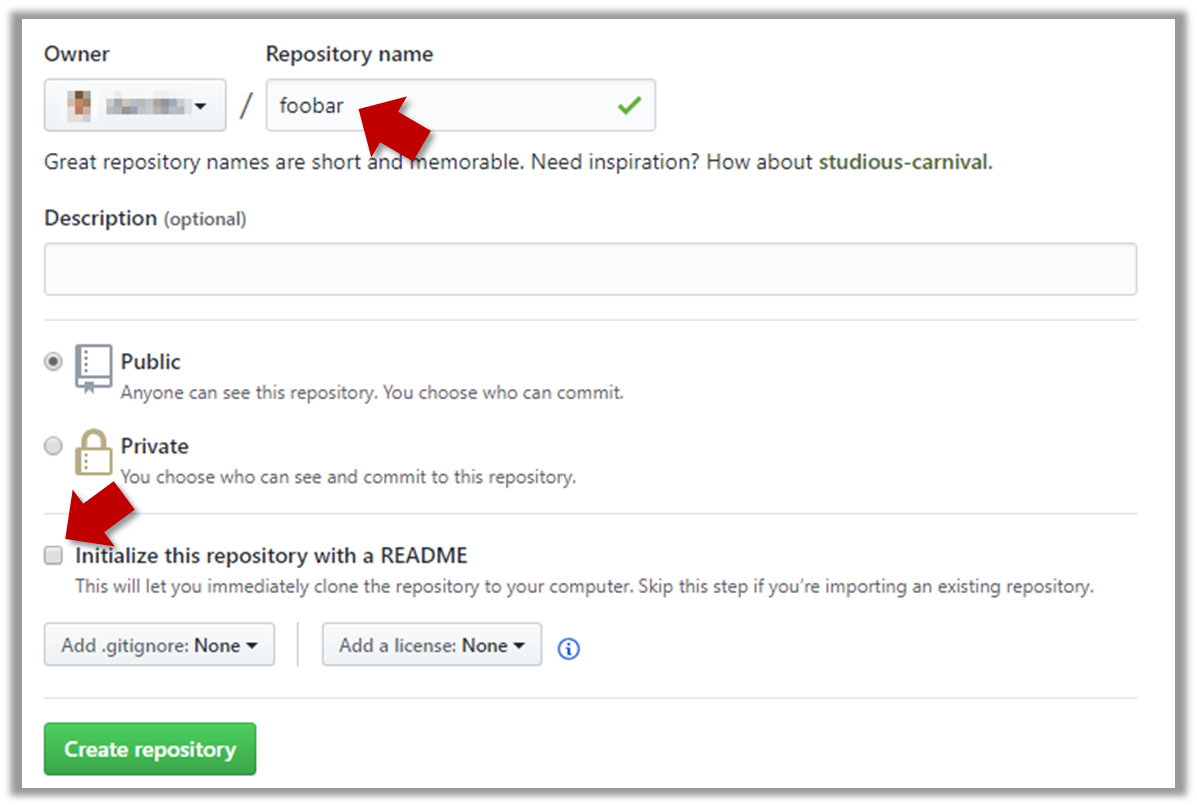

1. Create an empty remote repo on GitHub.

Login to your GitHub account and choose to create a new Repo.

In the next screen, provide a name for your repo but keep the Initialize this repo ... tick box unchecked.

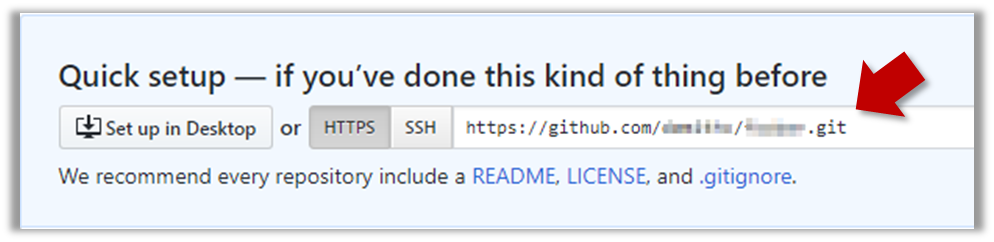

Note the URL of the repo. It will be of the form https://github.com/{your_user_name}/{repo_name}.git

e.g., https://github.com/johndoe/foobar.git (note the .git at the end)

2. Add the GitHub repo URL as a remote of the local repo. You can give it the name origin (or any other name).

3. Push the repo to the remote.

Push each branch to the new remote the usual way but use the -u flag to inform Git that you wish to track the branch (i.e., remember which branch in the remote repo corresponds to which branch in the local repo).

e.g., git push -u origin master

Guidance for the item(s) below:

🤔 In case you are puzzled by the sudden change of topic, it's because we take an iterative approach to covering topics.

Can explain IDEs

Professional software engineers often write code using Integrated Development Environments (IDEs). IDEs support most development-related work within the same tool (hence, the term integrated).

An IDE generally consists of:

- A source code editor that includes features such as syntax coloring, auto-completion, easy code navigation, error highlighting, and code-snippet generation.

- A compiler and/or an interpreter (together with other build automation support) that facilitates the compilation/linking/running/deployment of a program.

- A debugger that allows the developer to execute the program one step at a time to observe the run-time behavior in order to locate bugs.

- Other tools that aid various aspects of coding e.g. support for automated testing, drag-and-drop construction of UI components, version management support, simulation of the target runtime platform, and modeling support.

Examples of popular IDEs:

- Java: Eclipse, IntelliJ IDEA, NetBeans

- C#, C++: Visual Studio

- Swift: Xcode

- Python: PyCharm

Some web-based IDEs have appeared in recent times too e.g., Amazon's Cloud9 IDE.

Some experienced developers, in particular those with a UNIX background, prefer lightweight yet powerful text editors with scripting capabilities (e.g. Emacs) over heavier IDEs.

Guidance for the item(s) below:

This also means we are now switching focus from the implementation aspect to the testing aspect of SE.

Can explain testing

Testing: Operating a system or component under specified conditions, observing or recording the results, and making an evaluation of some aspect of the system or component. -- source: IEEE

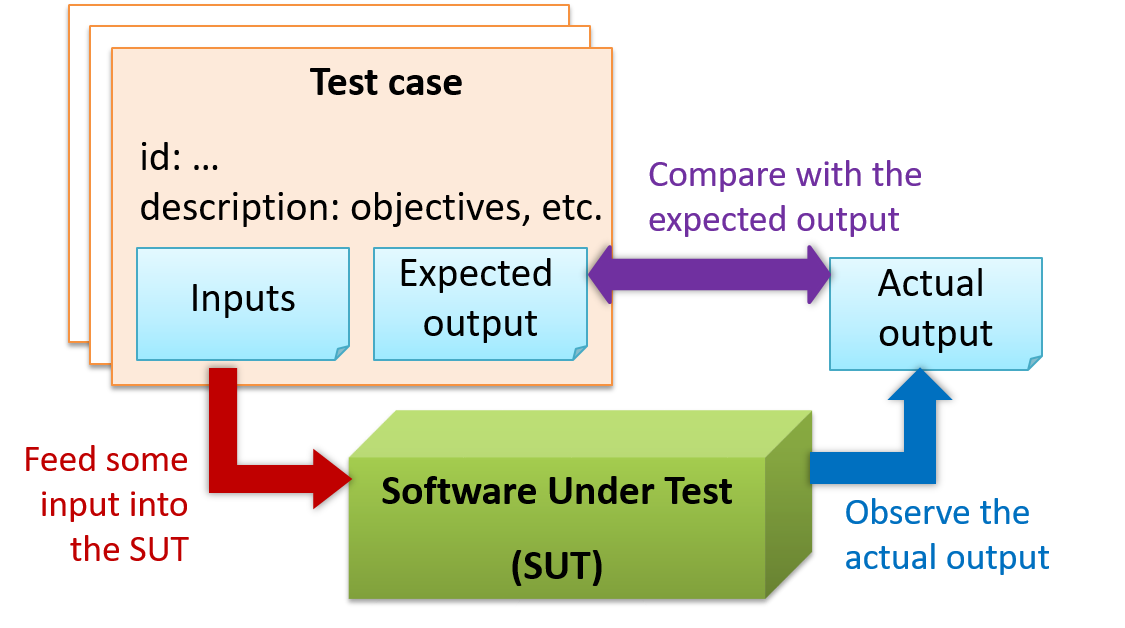

When testing, you execute a set of test cases. A test case specifies how to perform a test. At a minimum, it specifies the input to the software under test (SUT) and the expected behavior.

Example: A minimal test case for testing a browser:

- Input – Start the browser using a blank page (vertical scrollbar disabled). Then, load longfile.html located in the test data folder.

- Expected behavior – The scrollbar should be automatically enabled upon loading longfile.html.

Test cases can be determined based on the specification, reviewing similar existing systems, or comparing to the past behavior of the SUT.

For each test case you should do the following:

- Feed the input to the SUT

- Observe the actual output

- Compare actual output with the expected output

A test case failure is a mismatch between the expected behavior and the actual behavior. A failure indicates a potential defect (or a bug), unless the error is in the test case itself.

Example: In the browser example above, a test case failure is implied if the scrollbar remains disabled after loading longfile.html. The defect/bug causing that failure could be an uninitialized variable.
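The ideas of a test case, its expected behavior, and a test case failure can be sketched in a few lines of Python. Everything in this sketch is made up for illustration: SimpleTestCase, run_test_case, and the stand-in SUT to_upper are hypothetical names and do not come from any testing framework.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class SimpleTestCase:
    """At a minimum, a test case holds the input and the expected behavior."""
    input_value: str
    expected_output: str


def to_upper(text: str) -> str:
    """Hypothetical software under test (SUT)."""
    return text.upper()


def run_test_case(sut: Callable[[str], str], case: SimpleTestCase) -> bool:
    """Feed the input to the SUT, observe the output, compare with the expectation."""
    actual_output = sut(case.input_value)
    return actual_output == case.expected_output


if __name__ == "__main__":
    cases = [SimpleTestCase("abc", "ABC"), SimpleTestCase("a1b", "A1B")]
    for case in cases:
        verdict = "pass" if run_test_case(to_upper, case) else "FAIL"
        print(f"{case.input_value!r}: {verdict}")
```

A False result from run_test_case is a test case failure: it points to a potential defect in to_upper, unless the expected output recorded in the test case is itself wrong.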

Can explain regression testing

When you modify a system, the modification may result in some unintended and undesirable effects on the system. Such an effect is called a regression.

Regression testing is the re-testing of the software to detect regressions. Note that to detect regressions, you need to retest all related components, even if they had been tested before.

Regression testing is more effective when it is done frequently, after each small change. However, doing so can be prohibitively expensive if testing is done manually. Hence, regression testing is more practical when it is automated.
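As a rough illustration of a regression and how re-running earlier tests detects it, consider the Python sketch below. The functions full_name_v1, full_name_v2, and find_regressions are hypothetical examples invented for this purpose. The modified version adds middle-name support but accidentally breaks the behavior that the earlier test cases had already verified.

```python
def full_name_v1(first, last):
    """Original version."""
    return f"{first} {last}"


def full_name_v2(first, last, middle=""):
    """Modified version: supports a middle name, but introduces a regression."""
    return f"{first} {middle} {last}"  # leaves a double space when middle is empty


# Test cases written (and passed) before the modification: (args, expected).
EXISTING_CASES = [(("John", "Doe"), "John Doe"), (("Ada", "Lovelace"), "Ada Lovelace")]


def find_regressions(function, cases):
    """Re-run previously passing cases and return those that now fail."""
    return [(args, expected) for args, expected in cases if function(*args) != expected]


if __name__ == "__main__":
    print("v1 failures:", find_regressions(full_name_v1, EXISTING_CASES))
    print("v2 failures:", find_regressions(full_name_v2, EXISTING_CASES))
```

The new feature itself works (a middle name is inserted correctly), so only re-running the old cases reveals the problem.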

Can explain test automation

An automated test case can be run programmatically and the result of the test case (pass or fail) is determined programmatically. Compared to manual testing, automated testing reduces the effort required to run tests repeatedly and increases precision of testing (because manual testing is susceptible to human errors).
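A minimal sketch of automated test cases, using Python's built-in unittest module, is given below. The add function is a hypothetical SUT chosen only to keep the example short, and run_suite is an illustrative helper name. Both running the tests and deciding pass or fail happen programmatically.

```python
import unittest


def add(a, b):
    """Hypothetical SUT."""
    return a + b


class AddTest(unittest.TestCase):
    def test_positive_numbers(self):
        self.assertEqual(add(2, 3), 5)

    def test_negative_numbers(self):
        self.assertEqual(add(-2, -3), -5)


def run_suite():
    """Run the tests programmatically and return True only if all pass."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(AddTest)
    result = unittest.TextTestRunner(verbosity=0).run(suite)
    return result.wasSuccessful()


if __name__ == "__main__":
    print("all passed" if run_suite() else "some tests failed")
```

Because the whole suite runs with one call, it is cheap to repeat after every small change.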

Can semi-automate testing of CLIs

A simple way to semi-automate testing of a CLI (Command Line Interface) app is by using input/output re-direction.

- First, you feed the app with a sequence of test inputs that is stored in a file while redirecting the output to another file.

- Next, you compare the actual output file with another file containing the expected output.

Let's assume you are testing a CLI app called AddressBook. Here are the detailed steps:

1. Store the test input in the text file input.txt.

2. Store the output you expect from the SUT in another text file expected.txt.

3. Run the program as given below, which will redirect the text in input.txt as the input to AddressBook and similarly, will redirect the output of AddressBook to a text file output.txt. Note that this does not require any code changes to AddressBook.

java AddressBook < input.txt > output.txt

The way to run a CLI program differs based on the language.

e.g., In Python, assuming the code is in the AddressBook.py file, use the command

python AddressBook.py < input.txt > output.txt

If you are using Windows, use a normal command window to run the app, not a PowerShell window.

4. Next, you compare output.txt with expected.txt. This can be done using a utility such as Windows' FC (i.e. File Compare) command, Unix's diff command, or a GUI tool such as WinMerge.

FC output.txt expected.txt

Note that the above technique is only suitable when testing CLI apps, and only if the exact output can be predetermined. If the output varies from one run to the other (e.g. it contains a time stamp), this technique will not work. In those cases, you need more sophisticated ways of automating tests.
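The redirect-then-compare steps above can also be scripted. The Python sketch below is one possible way to do so, under stated assumptions: APP_COMMAND is a hypothetical stand-in for the real app (it merely echoes each input line in upper case), and run_with_redirection, files_match, and demo are illustrative names. To test a real app, replace APP_COMMAND with the actual command, e.g., ["java", "AddressBook"].

```python
import subprocess
import sys
import tempfile
from pathlib import Path

# Hypothetical stand-in for the CLI app: echoes each input line in upper case.
APP_COMMAND = [
    sys.executable,
    "-c",
    "import sys\nfor line in sys.stdin: print(line.strip().upper())",
]


def run_with_redirection(command, input_path, output_path):
    """Equivalent of: command < input_path > output_path"""
    with open(input_path) as stdin_file, open(output_path, "w") as stdout_file:
        subprocess.run(command, stdin=stdin_file, stdout=stdout_file, check=True)


def files_match(actual_path, expected_path):
    """Compare the two files line by line, ignoring line-ending differences."""
    actual = Path(actual_path).read_text().splitlines()
    expected = Path(expected_path).read_text().splitlines()
    return actual == expected


def demo():
    """Create sample input/expected files, run the app, and compare the output."""
    with tempfile.TemporaryDirectory() as folder:
        input_path = Path(folder, "input.txt")
        expected_path = Path(folder, "expected.txt")
        output_path = Path(folder, "output.txt")
        input_path.write_text("add john\nlist\n")
        expected_path.write_text("ADD JOHN\nLIST\n")
        run_with_redirection(APP_COMMAND, input_path, output_path)
        return files_match(output_path, expected_path)


if __name__ == "__main__":
    print("test passed" if demo() else "test FAILED")
```

As with the manual approach, this works only when the exact output can be predetermined.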

CLI application: An application that has a Command Line Interface, i.e., the user interacts with the app by typing in commands.

Follow up notes for the item(s) above:

Congrats! You've made it to the end of this week's topics. It feels like a lot right now but now that we got an early start, this stuff will be second nature to you by the time you are done with the semester. 😃